Authoring

Explore my academic publications, technical articles, and written content.


High-performance image generation using Stable Diffusion in KerasCV

2022-09-01

Generate new images using KerasCV's StableDiffusion model.

machine-learning


Teach StableDiffusion new concepts via Textual Inversion

2022-11-15

Learning new visual concepts with KerasCV's StableDiffusion implementation.

machine-learning, generative-ai


Marine Animal Object Detection with KerasCV

2022-12-10

Use deep learning to detect marine animals, including sharks, turtles, penguins, puffins, jellyfish and more!

machine-learning, object-detection


Efficient Graph-Friendly COCO Metric Computation for Train-Time Model Evaluation

2022-07-25

Pre-print written alongside François Chollet on a novel algorithm that closely approximates Mean Average Precision within the constraints of the TensorFlow graph.

The algorithm from this publication is used in the KerasCV COCO metric implementation, which can perform train-time evaluation with any KerasCV object detection model.

machine-learning, open-source


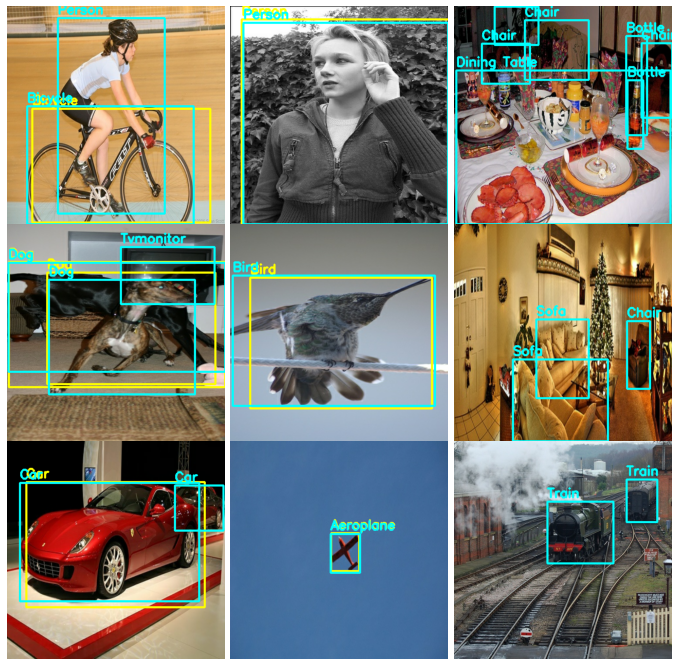

Deep Learning Object Detection Approaches to Signal Identification

2022-10-16

Pre-print written for ICASSP 2022. We decided not to continue this line of work, but our results and our spectrogram object detection dataset are open source and available on GitHub.

If you'd like to finish this work and attempt to get it published, feel free to reach out.

machine-learning, object-detection


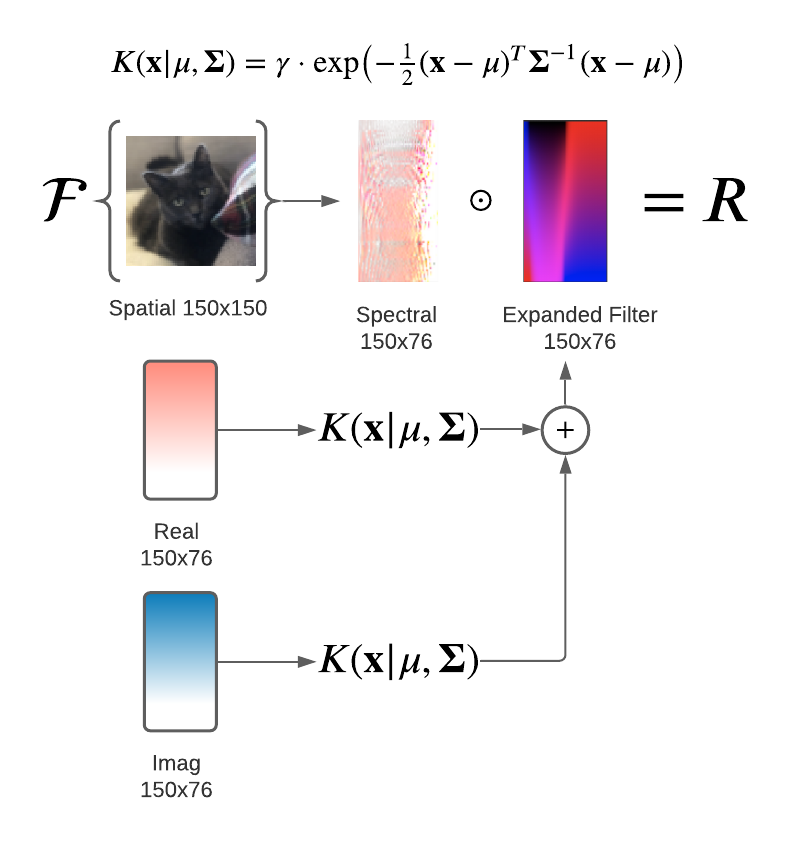

Parametric Spectral Filters for Fast Converging, Scalable Convolutional Neural Networks

2021-06-01

Primary author of this 2021 ICASSP publication. Please see the GitHub repository for full details.

machine-learning