The Definitive Guide to Object Detection

- Author: Luke Wood

The goal of this guide is to teach someone who knows the basics of machine learning how to train an extremely powerful object detection model from scratch. This guide explains a wide variety of techniques and gives some theoretical backing as to why they are effective. By the end of this guide you'll be able to train a powerful object detection model on your own custom datasets.

Throughout this guide we'll use the KerasCV library, but the concepts here are general and can be applied in any deep learning library. There are a lot of great object detection resources out there, notably the YOLO repos, but none were designed with modularity in mind; they're all-in-one solutions for object detection. Full disclosure: I'm pretty biased, and I mainly use the KerasCV object detection library because I wrote the majority of it.

KerasCV offers a complete set of production-grade APIs to solve object detection problems. These APIs include object-detection-specific data augmentation techniques, Keras-native COCO metrics, bounding box format conversion utilities, visualization tools, pretrained object detection models, and everything you need to train your own state-of-the-art object detection models.

We'll train our models in JAX, which anecdotally improves training times by around 30% when training is not throttled by the data pipeline.

import os

os.environ["KERAS_BACKEND"] = "jax"

from tensorflow import data as tf_data

import tensorflow_datasets as tfds

import keras

import keras_cv

import numpy as np

from keras_cv import bounding_box

from keras_cv import visualization

import tqdm

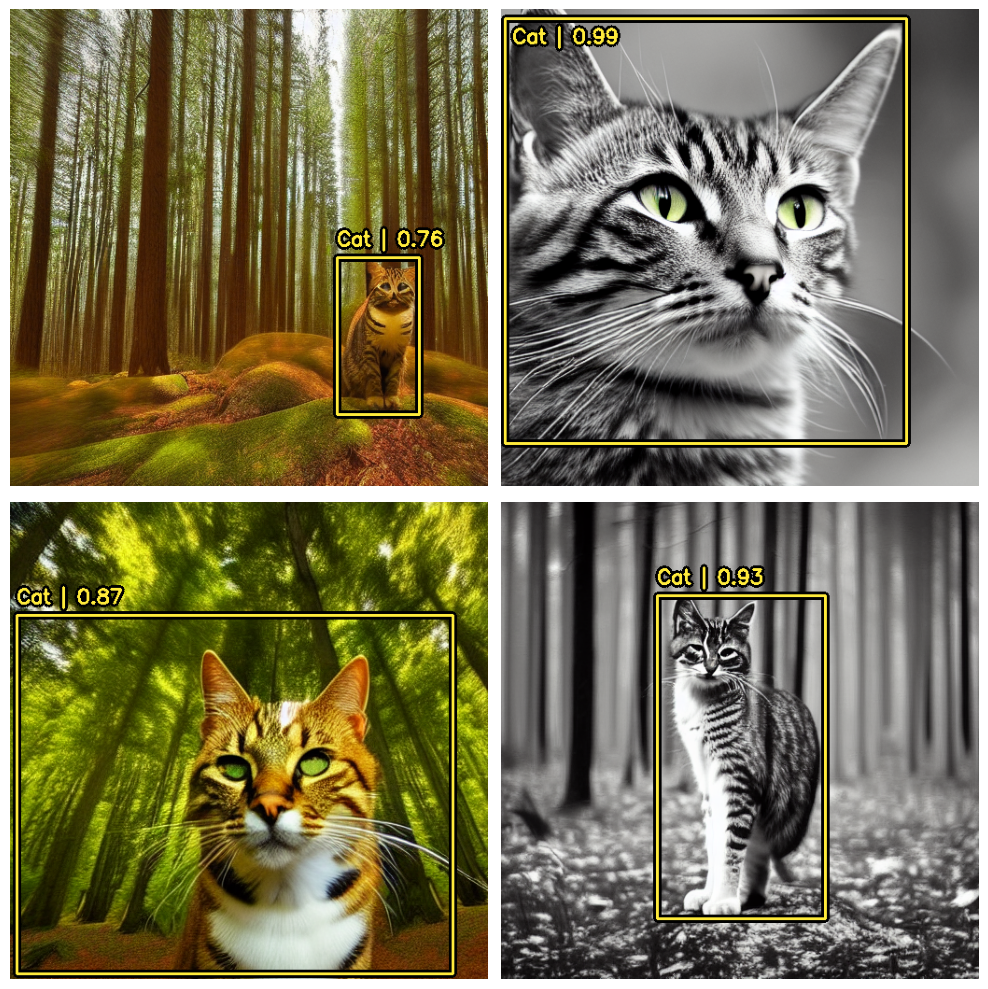

Object detection introduction

Object detection is the process of identifying, classifying, and localizing objects within a given image. Typically, your inputs are images, and your labels are bounding boxes with optional class labels. Object detection can be thought of as an extension of classification; however, instead of predicting one class label for the whole image, you must detect and localize an arbitrary number of objects.

For example:

The data for the above image may look something like this:

image = [height, width, 3]

bounding_boxes = {

"classes": [0], # 0 is an arbitrary class ID representing "cat"

"boxes": [[0.25, 0.4, .15, .1]]

# bounding box is in "rel_xywh" format

# so 0.25 represents the start of the bounding box 25% of

# the way across the image.

# The .15 represents that the width is 15% of the image width.

}
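
To make the "rel_xywh" format concrete, here is a minimal sketch that scales the relative box above to pixel coordinates. The helper name `rel_xywh_to_xywh` and the 640x480 image size are assumptions made for illustration; they are not part of KerasCV.

```python
import numpy as np


def rel_xywh_to_xywh(boxes, image_height, image_width):
    """Scale relative [x, y, w, h] boxes (fractions of the image) to pixels."""
    boxes = np.asarray(boxes, dtype="float32")
    # x and width scale with the image width; y and height with the image height.
    scale = np.array(
        [image_width, image_height, image_width, image_height], dtype="float32"
    )
    return boxes * scale


# Same label dictionary as above; the image size is a hypothetical 640x480.
bounding_boxes = {"classes": [0], "boxes": [[0.25, 0.4, 0.15, 0.1]]}
pixel_boxes = rel_xywh_to_xywh(
    bounding_boxes["boxes"], image_height=480, image_width=640
)
print(pixel_boxes)
```

On a 640x480 image, the box starts 160 pixels across and 192 pixels down, and is 96 pixels wide and 48 pixels tall.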

Since the inception of You Only Look Once (aka YOLO), object detection has primarily been solved using deep learning. Most deep learning architectures do this by cleverly framing the object detection problem as a combination of many small classification problems and many regression problems.

More specifically, this is done by generating many anchor boxes of varying shapes and sizes across the input images and assigning them each a class label, as well as x, y, width and height offsets. The model is trained to predict the class labels of each box, as well as the x, y, width, and height offsets of each box that is predicted to be an object.
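
A rough sketch of the anchor box idea described above, in plain NumPy. The function names, grid stride, scales, and aspect ratios are all assumptions chosen for illustration, and the offset encoding shown is one common choice (centers normalized by anchor size, sizes on a log scale), not necessarily what KerasCV uses internally.

```python
import numpy as np


def generate_anchors(image_size, stride, scales, aspect_ratios):
    """Return [num_anchors, 4] anchors as pixel (top-left x, y, width, height)."""
    # Anchor centers sit in the middle of each stride x stride grid cell.
    centers = np.arange(stride / 2, image_size, stride)
    cx, cy = np.meshgrid(centers, centers)
    cx, cy = cx.reshape(-1), cy.reshape(-1)

    anchors = []
    for scale in scales:
        for ratio in aspect_ratios:
            # ratio is width / height; the anchor area stays scale ** 2.
            w = scale * np.sqrt(ratio)
            h = scale / np.sqrt(ratio)
            widths = np.full_like(cx, w)
            heights = np.full_like(cx, h)
            anchors.append(
                np.stack([cx - w / 2, cy - h / 2, widths, heights], axis=-1)
            )
    return np.concatenate(anchors, axis=0)


def encode_offsets(anchor, box):
    """Regression targets that map one anchor onto one ground truth box."""
    ax, ay, aw, ah = anchor
    bx, by, bw, bh = box
    # Center offsets are normalized by the anchor size.
    dx = ((bx + bw / 2) - (ax + aw / 2)) / aw
    dy = ((by + bh / 2) - (ay + ah / 2)) / ah
    # Size offsets are expressed on a log scale.
    return np.array([dx, dy, np.log(bw / aw), np.log(bh / ah)])


anchors = generate_anchors(
    image_size=64, stride=32, scales=[32, 64], aspect_ratios=[0.5, 1.0, 2.0]
)
print(anchors.shape)

# Offsets a model would be trained to predict for a hypothetical ground truth box.
ground_truth = np.array([10.0, 10.0, 30.0, 40.0])
print(encode_offsets(anchors[0], ground_truth))
```

Each grid cell gets one anchor per scale and aspect ratio combination. The classification head predicts a class for every anchor, and the regression head predicts offsets like the ones returned by `encode_offsets`.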

Visualization of some sample anchor boxes:

Object detection is a technically complex problem, but luckily we offer a bulletproof approach to getting great results. Let's do this.

Perform detections with a pretrained model

The highest level API in the KerasCV Object Detection API is the keras_cv.models API. This API includes fully pretrained object detection models, such as keras_cv.models.YOLOV8Detector.

Let's get started by constructing a YOLOV8Detector pretrained on the Pascal VOC dataset.

pretrained_model = keras_cv.models.YOLOV8Detector.from_preset(

"yolo_v8_m_pascalvoc", bounding_box_format="xywh"

)

Notice the bounding_box_format argument?

Recall the format of bounding boxes from the section above:

bounding_boxes = {

"classes": [num_boxes],

"boxes": [num_boxes, 4]

}

This argument describes exactly what format the values in the "boxes" field of the label dictionary take in your pipeline. For example, a box in xywh format with its top left corner at the coordinates (100, 100) with a width of 55 and a height of 75 would be represented by:

[100, 100, 55, 75]

or equivalently in xyxy format:

[100, 100, 155, 175]
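
The relationship between the two formats can be sketched in a few lines of NumPy. The helpers `xywh_to_xyxy` and `xyxy_to_xywh` below are hypothetical names written for this illustration; in a real KerasCV pipeline you would more likely use the library's own bounding box format conversion utilities.

```python
import numpy as np


def xywh_to_xyxy(boxes):
    """Convert [left, top, width, height] boxes to [left, top, right, bottom]."""
    boxes = np.asarray(boxes, dtype="float32")
    x, y, w, h = np.split(boxes, 4, axis=-1)
    return np.concatenate([x, y, x + w, y + h], axis=-1)


def xyxy_to_xywh(boxes):
    """Convert [left, top, right, bottom] boxes to [left, top, width, height]."""
    boxes = np.asarray(boxes, dtype="float32")
    x1, y1, x2, y2 = np.split(boxes, 4, axis=-1)
    return np.concatenate([x1, y1, x2 - x1, y2 - y1], axis=-1)


box_xywh = [[100, 100, 55, 75]]
box_xyxy = xywh_to_xyxy(box_xywh)
print(box_xyxy)

# Converting back recovers the original box.
round_trip = xyxy_to_xywh(box_xyxy)
print(round_trip)
```

The same four numbers mean different things depending on the format, which is why a box passed to a component that expects the other format fails silently rather than raising an error.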

While this may seem simple, it is a critical piece of the KerasCV object detection API! Every component that processes bounding boxes requires a bounding_box_format argument. You can read more about KerasCV bounding box formats in the API docs.

This is done because there is no one correct format for bounding boxes! Components in different pipelines expect different formats, so by requiring the format to be specified we ensure that our components remain readable, reusable, and clear. Box format conversion bugs are perhaps the most common source of bugs in object detection pipelines; requiring this parameter mitigates them, especially when combining code from many sources.

Next let's load an image:

filepath = keras.utils.get_file(origin="https://i.imgur.com/gCNcJJI.jpg")

image = keras.utils.load_img(filepath)

image = np.array(image)

visualization.plot_image_gallery(

np.array([image]),

value_range=(0, 255),

rows=1,

cols=1,

scale=5,

)

Note: Keras pivoted to unifying KerasCV and KerasNLP into KerasHub. In the transition, someone rewrote the object detection guide and the link broke, so I'm self-hosting to make sure these guides are preserved.