Open Source Software

KerasCV

KerasCV is the official Keras repository of computer vision extensions to the Keras API (layers, metrics, losses, models, data augmentation) that applied computer vision engineers can use to quickly assemble production-grade, state-of-the-art training and inference pipelines.

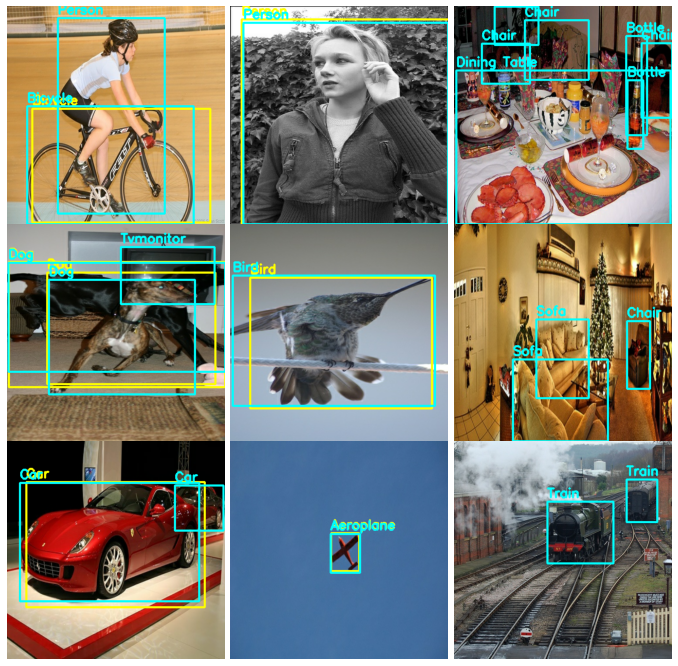

KerasCV Object Detection API

Designed and implemented the first official Keras object detection API. The API includes modular components for data augmentation, modelling, loss computation, and metric evaluation. Highlights include protection against silent failures through explicit bounding box format specification, and train-time COCO metric evaluation, a feature unique to KerasCV that is enabled by the algorithm I designed in "Efficient Graph-Friendly COCO Metric Computation for Train-Time Model Evaluation".
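To illustrate why an explicit bounding box format guards against silent failure, here is a minimal pure-Python sketch. It is not KerasCV's implementation: the helper names `convert_box` and `box_area` and the two format strings are assumptions chosen for this example. It shows that the same four numbers describe very different boxes depending on the format, and that nothing raises an error if the wrong one is assumed.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]

# Format names assumed for this sketch:
#   "xyxy" = (left, top, right, bottom)
#   "xywh" = (left, top, width, height)
SUPPORTED_FORMATS = ("xyxy", "xywh")


def convert_box(box: Sequence[float], source: str, target: str) -> Box:
    """Convert a single box between the two layouts above (hypothetical helper)."""
    for fmt in (source, target):
        if fmt not in SUPPORTED_FORMATS:
            # Fail loudly instead of guessing what the caller meant.
            raise ValueError(f"Unsupported bounding box format: {fmt!r}")

    a, b, c, d = box
    # Normalize to corner coordinates first.
    if source == "xywh":
        x1, y1, x2, y2 = a, b, a + c, b + d
    else:
        x1, y1, x2, y2 = a, b, c, d

    if target == "xywh":
        return (x1, y1, x2 - x1, y2 - y1)
    return (x1, y1, x2, y2)


def box_area(box: Sequence[float], bounding_box_format: str) -> float:
    """Area of a box; the caller must state the format explicitly."""
    x1, y1, x2, y2 = convert_box(box, bounding_box_format, "xyxy")
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


# The same four numbers, read under two different formats.
box = (10.0, 20.0, 30.0, 40.0)
area_as_xyxy = box_area(box, "xyxy")  # a 20 x 20 box
area_as_xywh = box_area(box, "xywh")  # a 30 x 40 box
```

Both calls succeed, yet the areas differ by a factor of three. A pipeline that guessed the format would train on wrong targets without any error, which is the failure mode that a required format argument is meant to prevent.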

Generative Modeling with the National Gallery of Art Open Data Program

Published a PyPI package that loads a dataset of images from the National Gallery of Art Open Data Program into a tf.data.Dataset, along with a companion generative modeling workflow that produces new images.

ReefNet

ReefNet is a RetinaNet implementation written in pure Keras, developed to detect Crown-of-Thorns Starfish on the Great Barrier Reef. More information about the problem that Crown-of-Thorns Starfish pose to the Great Barrier Reef, as well as efforts to control their population, can be found in our project write-up.

8000Net

Luke co-authored the lecture material for a graduate machine learning course taught at Southern Methodist University by Dr. Eric C. Larsen, contributing the material on Neural Style Transfer, Multi-Task Learning, and Reinforcement Learning. The lecture material is primarily formatted as Jupyter Notebooks and is fully open source.

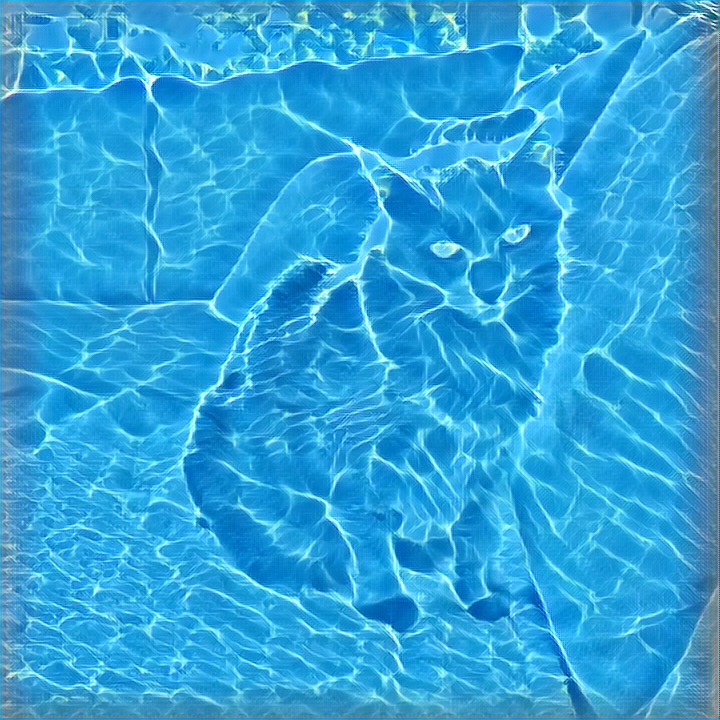

ImStyle.app

ImStyle performs Neural Style Transfer on your iOS device in real time. Our models are implemented with TensorFlow and trained on a Tesla P100 GPU for 8-16 hours. They are based on those proposed in Perceptual Losses for Real-Time Style Transfer and Super-Resolution [Johnson et al.]. Following training, the models are converted into Core ML models, allowing them to take advantage of the iPhone X's machine learning inference hardware. This hardware acceleration allows for at least 30 FPS on an iPhone X.

Reinforcement Learning Routing Environment

A routing environment for reinforcement learning. See the README for more details.